Here's an interesting prototype that combines the Social Computing Room with Max/MSP/Jitter and UbiSense. Video will follow soon.

The Social Computing Room (described here) has many potential applications, and one intriguing use is as an 'interactive media space'. The idea is that 360-degree visuals, combined with various types of sensors, software, and robotics, can create new kinds of experiences. Two examples that I can point to include the 'Spectacular Justice' exhibit that occurred last winter, as well as student work with Max/MSP/Jitter.

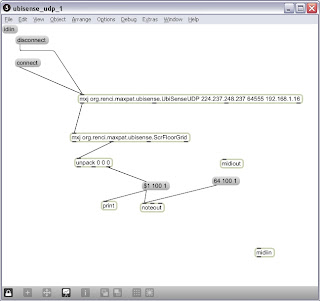

In this case, a prototype was written that uses UbiSense, which provides location tracking in 3D through an active tag. A Max object was written in Java to take the UbiSense location data off of a multicast stream, and push it out into Max-land. A second Max object was created to take the x,y,z data from UbiSense, in meters, and convert it into numbers that match up to the carpet squares on the floor of the Social Computing Room. Given those two new objects, the 'pad' number of the carpet square can be mapped to a MIDI note, and sent out through the Max noteout object.

Here's a picture of a simple patch:

What I'm really trying to show are techniqes for interacting with music and video. I could see using objects in the room that can be arranged to create musical patterns using an arpegiator or a loop player, and this can be combined with video on all four walls. MIDI or other methods can simultaneously control lights and other electronics. You could create a human theramin by having two people move around the room in relation to each other.

It's also interesting to let several people move around in the SCR holding multiple tags, you can create semi-musical patterns by working as a group. It's a fun thing, but points to some interesting possibilities. I've also adapted a Wiimote in the same manner.

No comments:

Post a Comment