Thursday, December 4, 2008

Vid from Supercomputing 08

Also cool to note is the HoloPanel that can be seen in the corner of the booth. A pair of these was used in the Social Computing Room as a part of the Spectacular Justice installation, and they were stunning!

All that said, really this is just to share some cool technology. I'm lucky to work with such smart and resourceful people at Renci.

Monday, November 17, 2008

Renci Multi-Touch blog

My hope is that a touch-table will grace the Social Computing Room. A long-term vision would be to extend our Collage/InfoMesa ideas in the SCR, using the 360-degree display to provide visual real estate. Imagine a group working around a touch table, shooting images out to the 360-degree wall with gestures on the touch table.

Thursday, November 13, 2008

Putting Google Earth into a WPF window

Anyhow, here's the window in all its (yawn) glory:

It was a bit of a slog to get it right, and I'll share the code that worked. First, I had to get Google Earth, which gives you this COM SDK. I did this using C#, Vis Studio 2008. I added a project ref to the COM Google Earth library, and created a class that extended HwndHost.

using System;

using System.Collections.Generic;

using System.Linq;

using System.Text;

using System.Windows;

using System.Windows.Controls;

using System.Windows.Data;

using System.Windows.Documents;

using System.Windows.Input;

using System.Windows.Media;

using System.Windows.Media.Imaging;

using System.Windows.Navigation;

using System.Windows.Shapes;

using System.Windows.Interop;

using System.Runtime.InteropServices;

using EARTHLib;

namespace GeTest

{

class MyHwndHost : HwndHost

{

[DllImport("user32.dll")]

static extern int SetParent(int hWndChild, int hWndParent);

IApplicationGE iGeApp;

[DllImport("user32.dll", EntryPoint = "GetDC")]

public static extern IntPtr GetDC(IntPtr ptr);

[DllImport("user32.dll", EntryPoint = "GetWindowDC")]

public static extern IntPtr GetWindowDC(Int32 ptr);

[DllImport("user32.dll", EntryPoint = "IsChild")]

public static extern bool IsChild(int hWndParent, int hwnd);

[DllImport("user32.dll", EntryPoint = "ReleaseDC")]

public static extern IntPtr ReleaseDC(IntPtr hWnd, IntPtr hDc);

[DllImport("user32.dll", CharSet = CharSet.Auto)]

public extern static bool SetWindowPos(int hWnd, IntPtr hWndInsertAfter, int X, int Y, int cx, int cy, uint uFlags);

[DllImport("user32.dll", CharSet = CharSet.Auto)]

public static extern IntPtr PostMessage(int hWnd, int msg, int wParam, int lParam);

//PInvoke declarations

[DllImport("user32.dll", EntryPoint = "CreateWindowEx", CharSet = CharSet.Auto)]

internal static extern IntPtr CreateWindowEx(int dwExStyle,

string lpszClassName,

string lpszWindowName,

int style,

int x, int y,

int width, int height,

IntPtr hwndParent,

IntPtr hMenu,

IntPtr hInst,

[MarshalAs(UnmanagedType.AsAny)] object pvParam);

readonly IntPtr HWND_BOTTOM = new IntPtr(1);

readonly IntPtr HWND_NOTOPMOST = new IntPtr(-2);

readonly IntPtr HWND_TOP = new IntPtr(0);

readonly IntPtr HWND_TOPMOST = new IntPtr(-1);

static readonly UInt32 SWP_NOSIZE = 1;

static readonly UInt32 SWP_NOMOVE = 2;

static readonly UInt32 SWP_NOZORDER = 4;

static readonly UInt32 SWP_NOREDRAW = 8;

static readonly UInt32 SWP_NOACTIVATE = 16;

static readonly UInt32 SWP_FRAMECHANGED = 32;

static readonly UInt32 SWP_SHOWWINDOW = 64;

static readonly UInt32 SWP_HIDEWINDOW = 128;

static readonly UInt32 SWP_NOCOPYBITS = 256;

static readonly UInt32 SWP_NOOWNERZORDER = 512;

static readonly UInt32 SWP_NOSENDCHANGING = 1024;

static readonly Int32 WM_CLOSE = 0x0010;

static readonly Int32 WM_QUIT = 0x0012;

private IntPtr GEHrender = (IntPtr)0;

private IntPtr GEParentHrender = (IntPtr)0;

internal const int

WS_CHILD = 0x40000000,

WS_VISIBLE = 0x10000000,

LBS_NOTIFY = 0x00000001,

HOST_ID = 0x00000002,

LISTBOX_ID = 0x00000001,

WS_VSCROLL = 0x00200000,

WS_BORDER = 0x00800000;

public ApplicationGEClass googleEarth;

protected override HandleRef BuildWindowCore(HandleRef hwndParent)

{

// start google earth

googleEarth = new ApplicationGEClass();

int ge = googleEarth.GetRenderHwnd();

IntPtr hwndControl = IntPtr.Zero;

IntPtr hwndHost = IntPtr.Zero;

int hostHeight = 200;

int hostWidth = 300;

// create a host window that is a child of this HwndHost. I'll plug this HwndHost class as a child of

// a border element in my WPF app,

hwndHost = CreateWindowEx(0, "static", "",

WS_CHILD | WS_VISIBLE,

0, 0,

hostWidth, hostHeight,

hwndParent.Handle,

(IntPtr)HOST_ID,

IntPtr.Zero,

0);

// set the parent of the Google Earth window to be the host I created here

int oldPar = SetParent(ge, (int) hwndHost);

// check to see if I'm now a child, for my own amusement

if (IsChild(hwndHost.ToInt32(), ge)) {

System.Console.WriteLine("now a child");

}

// return a ref to the hwndHost, which should now be the parent of the google earth window

return new HandleRef(this, hwndHost);

}

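[DllImport("user32.dll", EntryPoint = "DestroyWindow", CharSet = CharSet.Auto)]

internal static extern bool DestroyWindow(IntPtr hwnd);

protected override void DestroyWindowCore(HandleRef hwnd)

{

// destroy the host window created in BuildWindowCore instead of throwing when the WPF window closes

DestroyWindow(hwnd.Handle);

}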

}

}

The main window of my WPF app, in its constructor for the Window, just plugs this HwndHost as a child of a Border control:

public Window1()

{

InitializeComponent();

MyHwndHost hwndHost = new MyHwndHost();

border1.Child = hwndHost;

}

And you are off to the races! I found a lot of different approaches to this all over the web, but none of them seemed to work, as is often the case. Maybe this will work for you, or maybe it adds to the confusion.

UPDATE: can't run more than one Google Earth, so it's a bust, but still has use in my InfoMesa/Collage project. I wonder about Virtual Earth?

Wednesday, November 12, 2008

Video for Ubisense/MIDI demo

Oh well...off to a Games4Learning event...see ya there!

Monday, November 10, 2008

A bit about the Social Computing Room

The Social Computing Room (or SCR for short) is a visualization space at the Renci Engagement Center at UNC Chapel Hill. We're over near the hospital in the ITS-Manning building in Chapel Hill. It's one of three spaces, the others being the Showcase Dome (a 5-meter tilted Global Immersion dome) and Teleimmersion, which is a 4K (4 x HD resolution) stereo environment. We're working on some virtual tours for a new web site, so there should be some more info soon on those other spaces.

One of the primary features of the SCR is its 360-degree display. The room is essentially a 12,288x768 Windows desktop. (I've also tested a Mac in this environment, and it works as well). Here's a pic of the SCR...

The room has multiple cameras, wireless mics, multi-channel sound, 3D location tracking for people and objects, and is ultra-configurable (plenty of cat-6 for connecting things, Unistrut ceiling for adding new hardware). The room has so many possibilities that it gets difficult to keep up with all of the ideas. I think of it as a place where you can paint the walls with software, and make it into anything you want. There are currently a few emerging themes:

- The SCR is a collaborative visualization space. The room seems especially suited for groups considering a lot of information, doing comparison, interpretation, and grouping. There is a lot of visual real estate, and the four-wall arrangement seems to lend itself to spatial organization of data. As groups use the space for this purpose, I'm trying to capture how they work, and what they need. The goal is to create a seamless experience for collaboration. This is the reason I've been interested in WPF, and the InfoMesa technology demonstrator, as covered in this previous post.

- The SCR is a new media space. It's been used for art installations, and it has interesting possibilities for all sorts of interactive experiences, as illustrated by this recent experiment.

- The SCR is a place for interacting with the virtual world. We're working on a Second Life client that would have a 360-degree perspective, so that we can embed the SCR inside of a larger virtual environment, enabling all sorts of new possibilities.

That's a bit about the SCR. It's a really fascinating environment, and if you are on the UNC campus, give me a shout out and I'll show you around!

Friday, November 7, 2008

Music and Media in the SCR

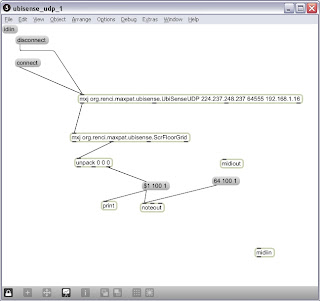

Here's an interesting prototype that combines the Social Computing Room with Max/MSP/Jitter and UbiSense. Video will follow soon.

The Social Computing Room (described here) has many potential applications, and one intriguing use is as an 'interactive media space'. The idea is that 360-degree visuals, combined with various types of sensors, software, and robotics, can create new kinds of experiences. Two examples that I can point to include the 'Spectacular Justice' exhibit that occurred last winter, as well as student work with Max/MSP/Jitter.

In this case, a prototype was written that uses UbiSense, which provides location tracking in 3D through an active tag. A Max object was written in Java to take the UbiSense location data off of a multicast stream, and push it out into Max-land. A second Max object was created to take the x,y,z data from UbiSense, in meters, and convert it into numbers that match up to the carpet squares on the floor of the Social Computing Room. Given those two new objects, the 'pad' number of the carpet square can be mapped to a MIDI note, and sent out through the Max noteout object.
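As a rough sketch of what that second object does (written here in Python rather than as a Max object), the conversion comes down to dividing the position by the carpet square size and numbering the squares row by row. The room dimensions, square size, base note, and note span below are all assumptions for illustration; the real values are not given here, and z is simply ignored.

```python
# Hypothetical room and carpet dimensions; none of these values come from the post.
ROOM_WIDTH_M = 6.0     # extent along x, in meters (assumed)
ROOM_DEPTH_M = 6.0     # extent along y, in meters (assumed)
SQUARE_SIZE_M = 0.5    # edge length of one carpet square (assumed)
BASE_NOTE = 36         # MIDI note assigned to pad 0 (assumed)
NOTE_SPAN = 48         # pads wrap around after four octaves (assumed)

COLS = int(ROOM_WIDTH_M / SQUARE_SIZE_M)
ROWS = int(ROOM_DEPTH_M / SQUARE_SIZE_M)


def position_to_pad(x, y):
    """Return the carpet-square index for a tag at (x, y) meters, numbered row by row."""
    # Clamp so that readings slightly outside the room still land on an edge square.
    col = min(max(int(x // SQUARE_SIZE_M), 0), COLS - 1)
    row = min(max(int(y // SQUARE_SIZE_M), 0), ROWS - 1)
    return row * COLS + col


def pad_to_midi_note(pad):
    """Map a pad number onto a MIDI note, wrapping so the result stays in range."""
    return BASE_NOTE + (pad % NOTE_SPAN)


pad = position_to_pad(1.2, 0.7)
print(pad, pad_to_midi_note(pad))
```

The resulting note number is the kind of value that would be handed to noteout in the patch.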

Here's a picture of a simple patch:

What I'm really trying to show are techniques for interacting with music and video. I could see using objects in the room that can be arranged to create musical patterns using an arpeggiator or a loop player, and this can be combined with video on all four walls. MIDI or other methods can simultaneously control lights and other electronics. You could create a human theremin by having two people move around the room in relation to each other.

It's also interesting to let several people move around in the SCR holding multiple tags; you can create semi-musical patterns by working as a group. It's a fun thing, but points to some interesting possibilities. I've also adapted a Wiimote in the same manner.

Tuesday, October 28, 2008

Adapting InfoMesa to the Social Computing Room

InfoMesa is a project to allow scientists to do more science and more discovery in a collaborative and data-rich environment. The metaphor that we have elected to use as the underlying fabric of the InfoMesa is a Whiteboard.

InfoMesa allows any kind of data or visualization to be added to the Whiteboard. Far from static, these tools are interactive, allowing data to be absorbed from data sources like Oracle, SQL Server, Excel Spreadsheets, XML or even Cloud-based web services. InfoMesa, when complete will support imagery, video, 2D connected models, 3D models (lit in a photo realistically manner), web searches, results from web service calls, Image Tile Maps, ScatterPlots, Sticky Notes, Ink Notes, Rich Annotations and Associations.

Check the blog link for screen shots. It's a really interesting application, as well as a nice example of the capabilities of WPF (Windows Presentation Foundation). As a dyed-in-the-wool Java and Ruby programmer, I don't necessarily fit the typical Microsoft bandwagon profile, but I am having quite good success leveraging the WPF framework for the challenging environment in our Social Computing Room, which is essentially a 12,288 x 768 desktop.

A bit of a rewind: the Social Computing Room is part of the Renci Engagement Center at UNC Chapel Hill. The SCR has a 360-degree visual display running on all four walls, with 12 projectors per wall. The relevant model we were already working on was a supporting environment for researchers working in collaborative groups, and considering LOTS of data at one time. The SCR is a great venue for approaching problems that fit the 'small multiples' mold.

One of my colleagues, Dave Borland, had created a prototype called 'Collage'. This prototype used OpenGL and C++ (including some nifty wxWidgets sleight of hand to allow OpenGL to render such a large visual application). Collage handled cool things like letting the mouse, and any images, wrap all the way around the room. Collage could also play videos, and we were looking at adding other capabilities. Another cool part of Collage was the ability to intelligently 'lay out' images. For example, it was a common activity to expand each image to size to one 'projector', avoiding any stitch lines between displays. We were also working on displaying metadata about the images on command, sorting data various ways, and generally assisting 'small multiple' visualization tasks.
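To make the wrap-around idea concrete, here is a minimal sketch of how a horizontal coordinate can be wrapped on a 12,288-pixel-wide desktop. This is not Collage's actual code (that was C++ and OpenGL); the function names are made up for illustration, and it assumes an image is never wider than the whole desktop.

```python
DESKTOP_WIDTH = 12288  # total width of the SCR desktop in pixels


def wrap_x(x):
    """Wrap a horizontal pixel coordinate so motion continues around the room."""
    return x % DESKTOP_WIDTH


def visible_spans(left, width):
    """Return the one or two (start, end) spans needed to draw an image across the seam."""
    left = wrap_x(left)
    right = left + width
    if right <= DESKTOP_WIDTH:
        return [(left, right)]
    # The image runs off the right edge, so the remainder reappears at x = 0.
    return [(left, DESKTOP_WIDTH), (0, right - DESKTOP_WIDTH)]


print(visible_spans(12000, 500))
```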

The downside of Collage is that it was a bit hard to extend, requiring a lot of OpenGL and wxWidgets prestidigitation to add new features. There were further plans to add Wiimote integration for multi-user input, and the ability to assign functionality to each wall. E.g., have a magnification wall, where thumbnails that were dragged to the magnification wall would automatically size for comparison.

After seeing InfoMesa in prototype form, I realized that many of the ideas we had in Collage mapped nicely onto the InfoMesa concept, and InfoMesa really moved the ball down the field. The first question I had was whether WPF would support, in a performant manner, a 12,288x768 desktop, and I was pleasantly surprised! The thing I've been working on for the past couple of weeks is taking the InfoMesa code, and adapting it to cover the functionality we already had in the OpenGL Collage prototype. I've been concentrating on the visual interface so far, leaving persistence, annotation, and metadata for later work. Here's a short vid of the CollageWPF adaptation of InfoMesa:

I wanted to hit on a few of the 'features' we've added, some requested by researchers who are using the prototype:

- InfoMesa is turned 'inside out', maximizing real estate. Wrapping controls and toolbars around the whole display doesn't work on a big wall, so I went with a right-click context menu. It would be cool for InfoMesa to expand full desktop or display the interface! Otherwise, it might be cool to concentrate on partitioning the application such that the host 'window' can be easily customized for various display types.

- Ability to automatically lay out and size imagery, which I implemented by creating a SceneManager to describe the environment, and a LayoutManager that can be subclassed for various layouts. The first LayoutManager does a scale and position to get one image per projector. The idea is that SceneManagers could be created for other viz environments, such as a 3x3 viz wall, or a 4K high-def display.

- I started thinking about a base 'widget' that can be subclassed to create other tools. Here I've still got things to learn about InfoMesa! I also started thinking about how these subclassed widgets would keep and share metadata, and allow the host 'canvas', or 'Universe', to know its widgets, and be able to manipulate them for things like layouts.

- I added a widget to display 'time series' images in a player. It will eventually work by synchronizing multiple 'time series' viewers so researchers can consider different model runs simultaneously. I also added a widget to digest a PowerPoint, break it into images, and then lay out those images.

- The original InfoMesa zooms the entire desktop. Researchers were really looking to scale individual images.

- I added mouse-over tool tips to display image metadata.
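The SceneManager/LayoutManager split mentioned in the list can be sketched roughly as follows. This is a Python illustration rather than the WPF/C# code, the class and field names are hypothetical, and it assumes the desktop divides into equal 1024x768 tiles (my assumption; only the 12,288x768 total is given above).

```python
from dataclasses import dataclass


@dataclass
class Scene:
    """Describes a display environment as a row of equal projector tiles."""
    tile_width: int
    tile_height: int
    tile_count: int


@dataclass
class Placement:
    x: float
    y: float
    width: float
    height: float


class LayoutManager:
    """Base class; subclasses decide where each image goes."""

    def layout(self, scene, image_sizes):
        raise NotImplementedError


class OnePerProjectorLayout(LayoutManager):
    """Scale each image to fit one tile and center it, so no image straddles a seam."""

    def layout(self, scene, image_sizes):
        placements = []
        # Images beyond the number of tiles are left unplaced in this sketch.
        for index, (w, h) in enumerate(image_sizes[:scene.tile_count]):
            scale = min(scene.tile_width / w, scene.tile_height / h)
            new_w, new_h = w * scale, h * scale
            x = index * scene.tile_width + (scene.tile_width - new_w) / 2
            y = (scene.tile_height - new_h) / 2
            placements.append(Placement(x, y, new_w, new_h))
        return placements


# Assumed geometry: twelve 1024x768 tiles, which adds up to 12,288x768.
scr = Scene(tile_width=1024, tile_height=768, tile_count=12)
placements = OnePerProjectorLayout().layout(scr, [(2048, 768), (512, 768)])
print(placements)
```

A different environment, such as a 3x3 viz wall, would need a Scene that also carries rows, plus its own LayoutManager subclass.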

More later....be sure to vote tomorrow. As a political junkie, I'm sure I'll be pretty tired looking on Wednesday morning.

Friday, October 3, 2008

InfoMesa

http://anirbanghoshscinno.spaces.live.com/

Friday, June 27, 2008

Blender -> Panda via Chicken, finally working

I'm really not trying to be a 3D animator, rather learning the mechanics of the animation and art path to better understand game engine development. I'm picking up Blender while picking up Panda. There are alternative methods of doing bone animation in Blender, so you really do have to do it one certain way to get it to work. I won't repeat the instructions, rather link off to the forum post, but this does work using the Chicken exporter plug-in for Blender.

Wednesday, June 25, 2008

Panda3D in a Global Immersion Dome

That said, I thought I'd pass along this tidbit from my latest adventure...

Serious gaming is a hot topic on campus. How can we utilize game engine platforms to create new tools for simulation, interactive visual applications, training, and learning? Marry this with unique assets such as an interactive dome, an ultra-high (4K) resolution stereo environment, and a cool 360-degree 'viz wall', and it gets even more interesting. Over the summer, my group is exploring this intersection of game engines, and unique environments, and reaching out to folks on campus to find like-minded 'explorers'.

The true topic of this post is getting Panda to work in our dome, which turned out to be fairly straightforward. The example I'm using is a bit 'hacky', because it reflects a lot of trial-and-error. Fortunately, Python is great for this. I'll try to annotate what did the trick, using the baked-in Panda and scene you get with the download...

# our dome has a 2800x2100 display, create a window at the 0,0 origin with no borders like so...

from pandac.PandaModules import loadPrcFileData

loadPrcFileData("", """win-size 2800 2100

win-origin 0 0

undecorated 1""")

import direct.directbase.DirectStart

import math

from direct.task import Task

from direct.actor import Actor

from direct.interval.IntervalGlobal import *

from pandac.PandaModules import *

# create a function that sets up a camera to a display region, pointing a given direction

# note that we're essentially creating one borderless window divided into four equal regions.

# each region depicts a camera with a precise eye-point and aspect ratio.

#

# NOTE this geometry is particular to our dome

def createCamera(dispRegion, x, y, z):

    camera=base.makeCamera(base.win,displayRegion=dispRegion)

    camera.node().getLens().setViewHpr(x, y, z)

    camera.node().getLens().setFov(112,86)

    return camera

# set the default display region to inactive so we can remake it

dr = base.camNode.getDisplayRegion(0)

dr.setActive(0)

#settings for main cam, which we will not really be displaying. Actually, this code might be

# unnecessary!

base.camLens.setViewHpr(45.0, 52.5, 0)

base.camLens.setFov(112)

# set up my dome-friendly display regions to reflect the dome geometry

window = dr.getWindow()

dr1 = window.makeDisplayRegion(0, 0.5, 0, 0.5)

dr1.setSort(dr.getSort())

dr2 = window.makeDisplayRegion(0.5, 1, 0, 0.5)

dr2.setSort(dr.getSort())

dr3 = window.makeDisplayRegion(0, 0.5, 0.5, 1)

dr3.setSort(dr.getSort())

dr4 = window.makeDisplayRegion(0.5, 1, 0.5, 1)

dr4.setSort(dr.getSort())

# create four cameras, one per region, with the dome geometry. Note that we're not using the

# base cam. I tried this at first, pointing the base cam at region 1. It worked, but it threw the

# geometry off for some reason. The fix was to create four cameras, parent them to the base

# cam, and off we go.

cam1 = createCamera((0, 0.5, 0, 0.5), 45.0, 52.5, 0)

dr1.setCamera(cam1)

cam2 = createCamera((0.5, 1, 0, 0.5), -45.0, 52.5, 0)

dr2.setCamera(cam2)

cam3 = createCamera((0, 0.5, 0.5, 1), 135.0, 52.5, 0)

dr3.setCamera(cam3)

cam4 = createCamera((0.5, 1, 0.5, 1), -135, 52.5, 0)

dr4.setCamera(cam4)

# loading some baked-in model

environ = loader.loadModel("models/environment")

environ.reparentTo(render)

environ.setScale(0.25,0.25,0.25)

environ.setPos(-8,42,0)

cam1.reparentTo(base.cam)

cam2.reparentTo(base.cam)

cam3.reparentTo(base.cam)

cam4.reparentTo(base.cam)

# rest of code follows...this works!
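As a sanity check on the geometry above, here is a small sketch in plain Python (no Panda import needed) that tabulates the four regions. The region fractions, headings, and 2800x2100 window size are copied from the script; the `QUADRANTS` table and the `region_pixels` helper are names made up for illustration.

```python
# (left, right, bottom, top) window fractions and lens heading for each quadrant,
# copied from the script above; pitch is 52.5 and roll is 0 for all four.
QUADRANTS = [
    ((0.0, 0.5, 0.0, 0.5), 45.0),
    ((0.5, 1.0, 0.0, 0.5), -45.0),
    ((0.0, 0.5, 0.5, 1.0), 135.0),
    ((0.5, 1.0, 0.5, 1.0), -135.0),
]
WIN_SIZE = (2800, 2100)


def region_pixels(region, win_size=WIN_SIZE):
    """Convert a fractional display region into its pixel width and height."""
    left, right, bottom, top = region
    return (right - left) * win_size[0], (top - bottom) * win_size[1]


for region, heading in QUADRANTS:
    width, height = region_pixels(region)
    print(heading, width, height)
```

Each quadrant works out to the same pixel size, and the four headings sit 90 degrees apart, which is what you'd expect for four cameras sharing one eye-point.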

Friday, May 9, 2008

Techno-travels and HASTAC Part II

One can think of a ton of ways to take this sort of thing. There are many examples of using the virtual world as a control panel for real-world devices and sensors, such as the Eolus One project. How can this idea be applied to communication between people, for social applications, etc.? What sort of person-to-person interactions between people in the SCR and remote visitors are possible? I have this idea that virtual visitors would fly in and view the actual SCR from a video wall. Then they could fly through the wall (through the looking glass) to see and communicate with the virtual people as they are arranged in the room. A fun thing we'll be using as a demo at HASTAC.

Friday, May 2, 2008

Techno-Travels and HASTAC Part I

I'll be presenting at the HASTAC conference on May 24th at UCLA. The conference has a theme of 'TechnoTravels/TeleMobility: HASTAC in Motion'. I'll quote the description of the theme:

This year’s theme is “techno-travels” and explores the multiple ways in which place, movement, borders, and identities are being renegotiated and remapped by new locative technologies. Featured projects will delve into mobility as a modality of knowledge and stake out new spaces for humanistic inquiry. How are border-crossings being re-conceptualized, experienced, and narrated in a world permeated by technologies of mobility? How is the geo-spatial web remapping physical geographies, location, and borderlands? How are digital cities interfacing with physical space? How do we move between virtual worlds? And what has become of sites of dwelling and stasis in a world saturated by techno-travels?

OK...so how do you take a bite out of that apple? In my case, the presentation is going to center on something called the 'Social Computing Room' (SCR), part of the visualization center at UNC Chapel Hill. There are lots of different ways to approach the SCR. It's a visualization space for research, it's a canvas for art and new media projects, it's a classroom, a video conference center, a gaming and simulation environment, and it's a physical space that acts as a port between the physical world and the digital world. It's difficult when talking about interesting new ideas to avoid overstating the potential, but I'll try to use the SCR to talk about how physical and digital worlds converge, using the 'port' metaphor. Thinking about the SCR as a port can start by looking at a picture of the space. Now compare that picture with a capture of a virtual version, in this case within Second Life:

To me, the SCR is a port in the sense that it exists in both worlds, and the ongoing evolution of the space will explore the ways these two sides of the coin interact. Before I go there, perhaps a bit more about the HASTAC theme. In this installment, let's talk about borders in a larger sense, coming back to the SCR a bit down the road.

Techno-travels? Borders? Mobility? Borders are falling away in our networked world. This means the borders that exist between geographic places, and the borders between the physical and virtual world. The globe is a beehive of activity, and that activity can be comprehended in real time from any vantage point. Cases in point are real-time mashups between RSS feeds and Google Maps, such as flickrvision and twittervision. These mashups show uploads of photos to Flickr, and mapping of twitters around the globe. You can watch the action unfold from your desktop, no matter where you are. Borders between places start to disappear as you watch ordinary life unfold across the map, and from this perspective, the physical borders seem to mean less, like the theme song to that old kids show 'Big Blue Marble', if you want to date yourself. Sites like MySpace and Orkut have visitors from all over the world, as illustrated by this ComScore survey, and social networks don't seem to observe these borders either.

The term 'neogeography' was coined by Joab Jackson in National Geographic News, to describe the markup of the world by mashing up mapping with blogs. Sites such as Platial serve as an example of neogeography in action, essentially providing social bookmarking of places. Google Earth is being marked up as well...Using Google Earth and Google Street View, you can see and tag the whole world. Tools like Sketch-up allow you to add 3D models to Google Earth, such as this Manhattan view:

So we're marking up the globe, and moving beyond markup to include 3D modeling. Web2.0 and 'neogeography' add social networking too. At the outset, I also waved my hands a bit at the SCR by comparing real and virtual pictures of this 'port'. That's a bunch of different threads that can be tied together by including some of the observations in an excellent MIT Technology Review article called 'Second Earth'. In that article, Wade Roush looks at virtual worlds such as Second Life, and at Google Earth and asks, "As these two trends continue from opposite directions, it's natural to ask what will happen when Second Life and Google Earth, or services like them, actually meet." Instead of socially marking up the world, the crucial element is the ability to be present at the border between real and virtual, to recognize others who are inhabiting that place at that time, and to connect, communicate, and share experiences in those places. This gets to how I would define the SCR as a port.

The drawback to starting out with this 'Second Earth' model is that it limits the terrain to a recognizable spatial point. While a real place sometimes can serve as a point of reference in the virtual world, that also unnecessarily constrains the meaning. What is an art exhibit? What is a scientific visualization? What is any collection of information? As naturally as we mark up the world, we're also marking up the web, collaborating, and experiencing media in a continuous two-way conversation...that's a lot of what Web2.0 is supposed to be about. How can we create the same joint experience, where we're all present together as our real or virtual selves sharing a common experience? That to me is the central goal of 'techno-travels', and perhaps expands a bit on the idea of border crossing.

Anyhow, I'm trying to come up with my HASTAC presentation, and thinking aloud while I do it.

Tuesday, April 15, 2008

Hands-free control of Second Life

video here...

Monday, April 14, 2008

NetBeans 6.0.1 running like a pig...here's how I fixed it

This is in my netbeans.conf, which should be under Program Files/NetBeans 6.0.1/etc on Windows. The critical change was the memory config:

netbeans_default_options="-J-Dcom.sun.aas.installRoot=\"C:\Program Files\glassfish-v2ur1\" -J-client -J-Xss2m -J-Xms32m -J-XX:PermSize=32m -J-XX:MaxPermSize=200m -J-Xverify:none -J-Dapple.laf.useScreenMenuBar=true"

Now NetBeans is running quite well. I'm hacking some Sun code samples to get data from the accelerometer to build a prototype air mouse. This isn't a standard mouse, but rather a way for multiple users to manipulate visualizations in the Social Computing Room. For grins, here's a shot of the space...

Thursday, April 10, 2008

Note to Self

main.class=org.sunspotworld.demo.TelemetryFrame

#main.args=COM1

#port=COM1

user.classpath=lib/log4j-1.2.15.jar

Of course, now I'll forget that I stuck it in my blog. I'm looking at using the spots to create a multi-user input interface to a 360 degree visualization environment (our Social Computing Room), at least as a proof-of-concept.

Wednesday, March 26, 2008

Android and Agents

Agents to me are the perfect interface between myself, my devices, my environment, and others around me. Agents can also play a part in mediating between my 'personal cloud', and the larger web. This mediation is two way...I may be life-blogging, sending real-time media, location reports, etc. I may also be watching for events, conditions, or proximity.

Anyhow, I am looking at setting up some agents to automate things in the Social Computing Room, so I popped out to the JADE site to see if I had the latest version, to find that they are working on a JADE agent toolkit for the Android platform:

Version 1.0 of JADE-ANDROID, a software package that allows developing agent oriented applications based on JADE for the ANDROID platform, has been released. Android is the software stack for mobile devices including the operating system released by the Open Handset Alliance in November 2007. The possibility of combining the expressiveness of FIPA communication supported by JADE agents with the power of the ANDROID platform brings, in our opinion, a strong value in the development of innovative applications based on social models and peer-to-peer paradigms. See the JADE-ANDROID guide for more details

That looks really interesting. Note their (tilab's) own observation about the relation of Android to social network enabled, peer-to-peer applications.

Incidentally, I note that I have crossed the 100th blog post line, so w00t!

Friday, March 14, 2008

Breaking news, Santa Fe blog covers Hillsborough blues jam

Monday, March 3, 2008

Play with a SunSpot without buying a developer kit

http://blogs.sun.com/davidgs/entry/beta_starts

Cloud Computing and MSoft

Tuesday, February 26, 2008

Getting a Ubisense CellData schema

I am working on code based on this forum exchange..., and it seems to be working, though I'm getting sporadic exceptions:

System.NullReferenceException was unhandled

Message="Object reference not set to an instance of an object."

Source="UbisensePlatform"

StackTrace:

at UPrivate.au.a(Byte[] A_0, EndPoint& A_1)

at UPrivate.am.j()

at UPrivate.am.k()

at System.Threading.ThreadHelper.ThreadStart_Context(Object state)

at System.Threading.ExecutionContext.Run(ExecutionContext executionContext, ContextCallback callback, Object state)

at System.Threading.ThreadHelper.ThreadStart()

I think this might be due to some underlying thread collision, or a subtle bug in my own code, so I'm trying to add additional instrumentation to my code. The current code is using the multiCell and RemoveObjectLocation. I am still working to debug that, but at the same time, I'm looking at addressing the CellData schema directly, using the remove_object method. I want to see if this clears up the problem.

I'm also doing burn-in testing of the code, right now there's a robot running around the Social Computing Room carrying around a few Ubisense tags to drive the system...quite fun!

Monday, February 25, 2008

Sun Worldwide Education and Research Conference

Sun is moving fast on many fronts in 3D worlds - but focusing on education. I hear they will have an important announcement this week at their Worldwide Education & Research Conference in SF:

http://www.events-at-sun.com/wwerc08/agenda.html

The agenda shows SUN Founder Scott McNealy speaking wedged between the Immersive Education Forum and Lunch the second day. I'm GUESSING that this placement is intentional and hints that Sun has BIG news for educators interested in immersive environments.

I played a bit with MPK20 (the Sun virtual environment). It still has limited features, but it's open, and among the ones to watch, along with Croquet. I may put up the feed in the Social Computing Room as availability permits; if anyone is interested in viewing it there, let me know.

Other than that, I'm wrestling a bit with UbiSense again!

Wednesday, February 13, 2008

Back to better habits

It has been a busy few months, largely spent putting together talks and demonstrations of the Social Computing Room, as well as the other spaces in the RENCI UNC Chapel Hill Engagement Center.

I'm into a lot of different things right now, which I'll describe in greater detail later. I'm:

- Working more with UbiSense, which is a real-time 3D location sensing system. I've been concerned with bridging UbiSense using their .Net API to something called VRPN, which is an abstraction layer developed in the Computer Science department at UNC Chapel Hill. Eventually, we'll have a full-blown UbiSense server implementation. I'm working on handling button press signals from the tags right now, having some C# fits.

- I began working on a tag ageing service for UbiSense, and ran into some code on the UbiSense forum that got me started (the UbiSense forum has been very helpful).

- I've been learning OpenGL and GLUT, which I'll need as we develop new applications for the Social Computing Room.

- Between VRPN and OpenGL, I'm having to use C++, which I've dabbled with in the past. It hasn't been my language of choice, but it's becoming more useful.
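For the tag ageing item, the basic idea as I understand it is to remember when each tag last reported and to drop the ones that have gone quiet. Here is a minimal sketch in Python; it does not use the UbiSense API at all, and the `TagAger` class, its method names, and the 5-second default are all hypothetical.

```python
import time


class TagAger:
    """Tracks when each tag was last seen and reports the ones that have gone stale."""

    def __init__(self, max_age_seconds=5.0, clock=time.monotonic):
        self.max_age = max_age_seconds
        self.clock = clock          # injectable so the logic can be tested without sleeping
        self.last_seen = {}

    def update(self, tag_id):
        """Record a fresh location report for a tag."""
        self.last_seen[tag_id] = self.clock()

    def expire(self):
        """Remove and return the ids of tags not seen within max_age seconds."""
        now = self.clock()
        stale = [t for t, seen in self.last_seen.items() if now - seen > self.max_age]
        for tag_id in stale:
            del self.last_seen[tag_id]
        return stale
```

In a real service, `update` would be called from the location event handler and `expire` from a timer, with each stale id passed on to whatever call removes the tag's location.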

Geez, that's enough for now.