Here's the video that was described in this post. The point of this experiment was to see if we could reasonably map carpet squares in the Social Computing Room to MIDI notes, and output those notes to the on-board MIDI implementation.

Oh well...off to a Games4Learning event...see ya there!

Wednesday, November 12, 2008

Friday, November 7, 2008

Music and Media in the SCR

UPDATE: delay on getting the video done, should be here by early this week...MC

Here's an interesting prototype that combines the Social Computing Room with Max/MSP/Jitter and UbiSense. Video will follow soon.

The Social Computing Room (described here) has many potential applications, and one intriguing use is as an 'interactive media space'. The idea is that 360-degree visuals, combined with various types of sensors, software, and robotics, can create new kinds of experiences. Two examples that I can point to include the 'Spectacular Justice' exhibit that occurred last winter, as well as student work with Max/MSP/Jitter.

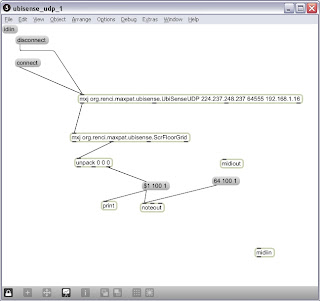

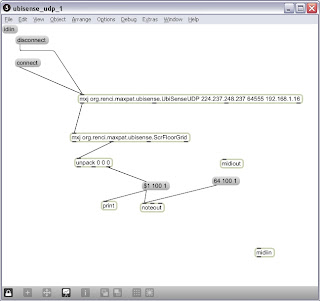

In this case, a prototype was written that uses UbiSense, which provides location tracking in 3D through an active tag. A Max object was written in Java to take the UbiSense location data off of a multicast stream, and push it out into Max-land. A second Max object was created to take the x,y,z data from UbiSense, in meters, and convert it into numbers that match up to the carpet squares on the floor of the Social Computing Room. Given those two new objects, the 'pad' number of the carpet square can be mapped to a MIDI note, and sent out through the Max noteout object.
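As a rough illustration of the second object's job, here is a minimal Java sketch of turning a position in meters into a pad number and then a MIDI note. The square size, grid dimensions, base note, and the names `CarpetPadMapper`, `padFor`, and `midiNoteFor` are all assumptions made for the example; they are not taken from the actual Max object, and the real room layout may differ.

```java
/**
 * Hypothetical sketch: maps an (x, y) position in meters to a carpet-square
 * "pad" number, and a pad number to a MIDI note. All constants passed in
 * (square size, grid size, base note) are assumptions for illustration.
 */
final class CarpetPadMapper {

    private CarpetPadMapper() {
    }

    /** Returns a zero-based pad index, counting row by row across the floor. */
    static int padFor(double xMeters, double yMeters,
                      double squareSizeMeters, int columns, int rows) {
        int col = clamp((int) Math.floor(xMeters / squareSizeMeters), 0, columns - 1);
        int row = clamp((int) Math.floor(yMeters / squareSizeMeters), 0, rows - 1);
        return row * columns + col;
    }

    /** Offsets the pad by a base note and keeps the result in the MIDI range 0..127. */
    static int midiNoteFor(int pad, int baseNote) {
        return clamp(baseNote + pad, 0, 127);
    }

    private static int clamp(int value, int min, int max) {
        return Math.max(min, Math.min(max, value));
    }
}
```

With half-meter squares on a 10 by 10 grid and a base note of 36, a tag at x = 1.2 m, y = 0.7 m lands on pad 12, which becomes note 48. Positions outside the grid are clamped to the nearest edge square rather than dropped, which is one possible choice among several.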

Here's a picture of a simple patch:

What I'm really trying to show are techniques for interacting with music and video. I could see using objects in the room that can be arranged to create musical patterns using an arpeggiator or a loop player, and this can be combined with video on all four walls. MIDI or other methods can simultaneously control lights and other electronics. You could create a human theremin by having two people move around the room in relation to each other.
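The human theremin idea could be sketched along these lines: take the floor distance between two tags and scale it onto a range of MIDI notes. This is only a guess at one way to do it; the class name `HumanTheremin`, the note range, the maximum distance, and the choice that closer means higher pitch are all assumptions, not part of the prototype.

```java
/**
 * Hypothetical sketch of a "human theremin": the distance between two tags
 * is scaled onto a MIDI note range. Names and constants are illustrative only.
 */
final class HumanTheremin {

    private HumanTheremin() {
    }

    /** Straight-line floor distance in meters between two tag positions. */
    static double distance(double x1, double y1, double x2, double y2) {
        return Math.hypot(x2 - x1, y2 - y1);
    }

    /**
     * Maps a distance onto [lowNote, highNote]. A distance of zero gives
     * highNote; maxDistanceMeters or more gives lowNote.
     */
    static int noteForDistance(double distanceMeters, double maxDistanceMeters,
                               int lowNote, int highNote) {
        double t = Math.max(0.0, Math.min(1.0, distanceMeters / maxDistanceMeters));
        return (int) Math.round(highNote - t * (highNote - lowNote));
    }
}
```

For example, two people standing 5 m apart in a room treated as 10 m across, with a note range of 48 to 72, would produce note 60. A continuous controller or pitch bend would probably feel more theremin-like than stepped notes, but stepped notes fit the noteout approach already described.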

It's also interesting to let several people move around in the SCR holding multiple tags; you can create semi-musical patterns by working as a group. It's a fun thing, but it points to some interesting possibilities. I've also adapted a Wiimote in the same manner.

Friday, May 9, 2008

Techno-travels and HASTAC Part II

In brief, here's a demo of a physical/virtual mashup. In this case, UbiSense tracking is used on individuals within a space called the Social Computing Room, and depicted within a virtual representation of the same space.

One can think of a ton of ways to take this sort of thing. There are many examples of using the virtual world as a control panel for real-world devices and sensors, such as the Eolus One project. How can this idea be applied to communication between people, for social applications, etc.? What sort of person-to-person interactions between people in the SCR and remote visitors are possible? I have this idea that virtual visitors would fly in and view the actual SCR from a video wall. Then they could fly through the wall (through the looking glass) to see and communicate with the virtual people as they are arranged in the room. It's a fun thing we'll be using as a demo at HASTAC.

Tuesday, February 26, 2008

Getting a Ubisense CellData schema

I posted this on the Ubisense forum...I'm running into many quandaries about getting at different parts of the Ubisense architecture. I'm trying to build a service to age out Ubisense tags. The issue is that Ubisense 'remembers' the last sensed location of a tag. This makes sense when the tag is on a pallet in a warehouse, but it causes a problem detecting when Elvis has left the building: when a person walks out of range of the sensors, he's still 'in the building'. This can cause issues depending on the application.
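The aging idea itself is simple enough to sketch without touching the Ubisense API at all. The Java below (used only for illustration; the service described here is .NET) keeps a last-seen timestamp per tag and reports the tags that have been silent longer than a timeout. The class `TagAger`, its method names, and the timeout value are hypothetical, and the step that actually removes a tag's location from the platform is deliberately left out.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.Iterator;
import java.util.List;
import java.util.Map;

/**
 * Hypothetical sketch of tag aging: remembers when each tag was last sensed
 * and reports tags that have gone quiet. Does not call any Ubisense API.
 */
final class TagAger {

    private final long timeoutMillis;
    private final Map<String, Long> lastSeen = new HashMap<>();

    TagAger(long timeoutMillis) {
        this.timeoutMillis = timeoutMillis;
    }

    /** Record a fresh location event for a tag. Synchronized because events may arrive on another thread. */
    synchronized void sighting(String tagId, long nowMillis) {
        lastSeen.put(tagId, nowMillis);
    }

    /** Returns and forgets every tag not seen within the timeout. */
    synchronized List<String> expire(long nowMillis) {
        List<String> expired = new ArrayList<>();
        Iterator<Map.Entry<String, Long>> it = lastSeen.entrySet().iterator();
        while (it.hasNext()) {
            Map.Entry<String, Long> entry = it.next();
            if (nowMillis - entry.getValue() > timeoutMillis) {
                expired.add(entry.getKey());
                it.remove();
            }
        }
        return expired;
    }
}
```

A periodic timer would call `expire` and, for each returned tag, ask the platform to drop that tag's stored location. Passing the clock value in as a parameter keeps the logic easy to test with made-up times.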

I am working on code based on this forum exchange..., and it seems to be working, though I'm getting sporadic exceptions:

    System.NullReferenceException was unhandled
      Message="Object reference not set to an instance of an object."
      Source="UbisensePlatform"
      StackTrace:
        at UPrivate.au.a(Byte[] A_0, EndPoint& A_1)
        at UPrivate.am.j()
        at UPrivate.am.k()
        at System.Threading.ThreadHelper.ThreadStart_Context(Object state)
        at System.Threading.ExecutionContext.Run(ExecutionContext executionContext, ContextCallback callback, Object state)
        at System.Threading.ThreadHelper.ThreadStart()

I think this might be due to some underlying thread collision, or a subtle bug in my own code, so I'm adding more instrumentation to my code. The current code is using the multiCell and RemoveObjectLocation. I am still working to debug that, but at the same time, I'm looking at addressing the CellData schema directly, using the remove_object method. I want to see if this clears up the problem.

I'm also doing burn-in testing of the code; right now there's a robot running around the Social Computing Room carrying a few Ubisense tags to drive the system...quite fun!

Thursday, October 11, 2007

Turning Turing Around

I was reading Irving Wladawsky-Berger's blog today when I happened upon this wonderful observation:

I was reminded of the Turing Test recently, as I have been watching the huge progress we are making in social networks, virtual worlds and personal robots. Our objective in these applications can perhaps be viewed as the flip side of the Turing Test. We are leveraging technology to enable real people to infuse virtual objects - avatars, personal robots, etc - with intelligence, - as opposed to leveraging technology to enable machines and software to behave as if they are intelligent.

What intrigues me so much about virtual worlds like Second Life is this ability of avatar-based virtual spaces to allow you to push through the barrier, and cross over. How's that for a bunch of metaphysical BS! This is a different aim than something like Looking Glass, which is trying to apply a 3D metaphor to a 2D interaction...it's about stepping through to live with the data, or the sensors, or the other distant collaborators. As the real world becomes more inhabited by pervasive computing, it only seems natural that we go and visit the virtual on its own turf. One wonders about the definition of an application interface in the future. As machines grow smarter, perhaps we'll pop into the 'living room' of our personal agent to have a chat.

At any rate, there are a couple of fun things I'll be looking at in the near future that can tie in to these ideas. First, the idea of pervasive, wireless sensors everywhere. I'm waiting for a SunSpot Developers Kit, and there will be some sensor applications coming down the pike that could involve these extremely cool sensors. The fact that they use Java is a plus in my book. Needless to say, I'll be brushing up on my J2ME.

The next thing I see coming down the pike is real time location tracking, using the UbiSense platform. This is being leveraged for an intriguing space called a Social Computing Room, and has all sorts of potential uses. Here, I'm going to be doing some .Net programming.

Like the blog quote above, I've had a unique chance to push the physical into the virtual, and with the mentioned projects, there's a chance to work in the other direction. Where these meet is getting to be a pretty interesting space!

Labels: second life, sunspot, ubiquitous computing, ubisense
