Friday, June 5, 2009

Come into my office

While I'm pimping...here's my 'other life', my band Good Rocking Sam.

Monday, June 1, 2009

Updates

The Social Computing Room at RENCI has been busy...

- I'm working on a new media project that uses Max/MSP/Jitter to create MIDI music using tangible objects with embedded UbiSense tags as the 'instrument'.

- I'm working on installing a very cool Flash/Flex based media project that uses all four surfaces of the Social Computing Room. This work has been installed elsewhere, so it's an adaptation. It really looks cool! This has allowed me to learn a bit more about Flash and Flex. As a programmer, I 'get' Flex much better than I get Flash.

- I'm working on a virtual worlds project, utilizing a 360-degree Second Life client to stage mock trials.

I've been working mostly on the back end, creating a services layer for storing metadata (the part I'm working on now) and for accessing arbitrary data stores based on the metadata. The metadata service layer is pluggable by interface, and my first implementation uses NHibernate to store metadata on a back-end server. Once this is done, that metadata layer can have pluggable modules for things like cloud databases.

For the pluggable data stores, the first stores will probably be a mounted file system, then a database, then an iRODS repository. After this, in order, it will probably be HTTP, FTP, then cloud databases.
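
To make the 'pluggable by interface' idea concrete, here is a minimal sketch in Python. It is not the actual services layer (that is .NET with NHibernate); every class and method name below is hypothetical, and the in-memory classes only stand in for real back ends such as a database or a mounted file system.

```python
from abc import ABC, abstractmethod


class MetadataStore(ABC):
    """Hypothetical interface: anything that can save and look up metadata."""

    @abstractmethod
    def put(self, key, record):
        ...

    @abstractmethod
    def get(self, key):
        ...


class DataStore(ABC):
    """Hypothetical interface: anything that can return bytes for a location."""

    @abstractmethod
    def read(self, location):
        ...


class InMemoryMetadataStore(MetadataStore):
    """Stand-in for a real metadata back end (e.g. a database server)."""

    def __init__(self):
        self._records = {}

    def put(self, key, record):
        self._records[key] = dict(record)

    def get(self, key):
        return self._records[key]


class DictDataStore(DataStore):
    """Stand-in for a real data store (e.g. a mounted file system)."""

    def __init__(self, contents):
        self._contents = dict(contents)

    def read(self, location):
        return self._contents[location]


class DataAccessService:
    """Looks up metadata first, then asks the matching store for the data."""

    def __init__(self, metadata):
        self._metadata = metadata
        self._stores = {}

    def register_store(self, kind, store):
        self._stores[kind] = store

    def fetch(self, key):
        record = self._metadata.get(key)
        store = self._stores[record["store"]]
        return store.read(record["location"])
```

The point of the design is that a new kind of store only has to implement `read` and be registered under a name; nothing in `DataAccessService` changes.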

The Social Computing Room will be integrating a multi-touch table later in the summer, and therefore I'm kicking some of the user interface stuff down the road. I want to allow folks to sit at the multi-touch table and interact with arbitrary data stores, manipulating on the touch table, and viewing on the 360-degree display. This would be soooooo cool.

Serious Games

I'm learning about the Unity3d game engine in my 'spare cycles'. We've ported a few Unity projects to the dome, and blogged about it.

Lots of things going on, as you can see. Main technologies I've worked with in the last two months:

- .Net, C#, WPF

- Java, including some Jetty work, and some socket stuff.

- Max/MSP patches

- Flex and Flash development

- WordPress and a bit of PHP

- QuickTime Streaming Server and QuickTime Broadcaster

- Unity3D

- Second Life building and LSL scripting.

Thursday, January 29, 2009

Sitting on Top of the (virtual) world

The idea is to embed the Social Computing Room within a larger virtual space, so that you can look out in every direction to see what is happening in the virtual world. In the pic below, I'm in the SCR looking at my avatar...

How about looking in? We have video cameras from all angles, so I thought about building a 'fish tank' from the SL perspective so that avatars can walk around the SCR and see inside of it. The bread and butter of two-way audio and video, along with text chat, is the obvious next step. Here's a shot from SL looking into the Social Computing Room...

Right now it's just a bare media object, and one interesting question I have is what to build on the virtual side. Do I want to mirror the room, or perhaps the SCR could be sitting on the bottom of the sea? Once the basics are tackled, the SCR and the 4-channel Second Life client make for a unique research space. The SCR is well suited for installation of all sorts of sensors, robotics, and input devices. This is something of a side project, but I've noted how intrigued people are when they visit the SCR and see the first prototypes!

Monday, November 17, 2008

Renci Multi-Touch blog

My hope is that a touch-table will grace the Social Computing Room. A long-term vision would be to extend our Collage/InfoMesa ideas in the SCR, using the 360-degree display to provide visual real estate. Imagine a group working around a touch table, shooting images out to the 360-degree wall with gestures on the touch table.

Wednesday, November 12, 2008

Video for Ubisense/MIDI demo

Oh well...off to a Games4Learning event...see ya there!

Monday, November 10, 2008

A bit about the Social Computing Room

The Social Computing Room (or SCR for short) is a visualization space at the Renci Engagement Center at UNC Chapel Hill. We're over near the hospital in the ITS-Manning building in Chapel Hill. It's one of three spaces, the others being the Showcase Dome (a 5-meter tilted Global Immersion dome) and Teleimmersion, which is a 4K (4 x HD resolution) stereo environment. We're working on some virtual tours for a new web site, so there should be some more info soon on those other spaces.

One of the primary features of the SCR is its 360-degree display. The room is essentially a 12,288x768 Windows desktop. (I've also tested a Mac in this environment, and it works as well.) Here's a pic of the SCR...

The room has multiple cameras, wireless mics, multi-channel sound, 3D location tracking for people and objects, and is ultra-configurable (plenty of cat-6 for connecting things, Unistrut ceiling for adding new hardware). The room has so many possibilities that it gets difficult to keep up with all of the ideas. I think of it as a place where you can paint the walls with software, and make it into anything you want. There are currently a few emerging themes:

- The SCR is a collaborative visualization space. The room seems especially suited for groups considering a lot of information, doing comparison, interpretation, and grouping. There is a lot of visual real estate, and the four-wall arrangement seems to lend itself to spatial organization of data. As groups use the space for this purpose, I'm trying to capture how they work, and what they need. The goal is to create a seamless experience for collaboration. This is the reason I've been interested in WPF, and the InfoMesa technology demonstrator, as covered in this previous post.

- The SCR is a new media space. It's been used for art installations, and it has interesting possibilities for all sorts of interactive experiences, as illustrated by this recent experiment.

- The SCR is a place for interacting with the virtual world. We're working on a Second Life client that would have a 360-degree perspective, so that we can embed the SCR inside of a larger virtual environment, enabling all sorts of new possibilities.

That's a bit about the SCR. It's a really fascinating environment, and if you are on the UNC campus, give me a shout out and I'll show you around!

Friday, November 7, 2008

Music and Media in the SCR

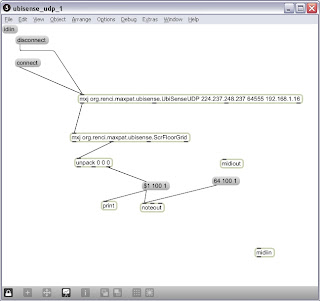

Here's an interesting prototype that combines the Social Computing Room with Max/MSP/Jitter and UbiSense. Video will follow soon.

The Social Computing Room (described here) has many potential applications, and one intriguing use is as an 'interactive media space'. The idea is that 360-degree visuals, combined with various types of sensors, software, and robotics, can create new kinds of experiences. Two examples that I can point to include the 'Spectacular Justice' exhibit that occurred last winter, as well as student work with Max/MSP/Jitter.

In this case, a prototype was written that uses UbiSense, which provides location tracking in 3D through an active tag. A Max object was written in Java to take the UbiSense location data off of a multicast stream, and push it out into Max-land. A second Max object was created to take the x,y,z data from UbiSense, in meters, and convert it into numbers that match up to the carpet squares on the floor of the Social Computing Room. Given those two new objects, the 'pad' number of the carpet square can be mapped to a MIDI note, and sent out through the Max noteout object.
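
As a rough illustration of the second object and the note mapping, here is a small Python sketch (the real objects are Max externals written in Java, and this is not that code). The carpet-square size, the grid dimensions, the row-major pad numbering, and the base note are all assumptions made up for the example.

```python
import math

# All of these values are assumptions for illustration only.
SQUARE_SIZE_M = 0.5   # edge length of one carpet square, in meters
COLUMNS = 12          # carpet squares along the x axis
ROWS = 12             # carpet squares along the y axis


def position_to_pad(x_m, y_m):
    """Convert an x,y position in meters to a carpet-square 'pad' number.

    Pads are numbered row by row starting at 0, and positions outside the
    floor are clamped to the nearest edge square.
    """
    col = min(max(math.floor(x_m / SQUARE_SIZE_M), 0), COLUMNS - 1)
    row = min(max(math.floor(y_m / SQUARE_SIZE_M), 0), ROWS - 1)
    return row * COLUMNS + col


def pad_to_midi_note(pad, base_note=36):
    """Map a pad number to a MIDI note, capped at the MIDI maximum of 127."""
    return min(base_note + pad, 127)
```

With these assumed values, a tag at (1.2, 0.7) meters lands on pad 14, which maps to MIDI note 50. The z coordinate is ignored here; it could just as easily drive velocity.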

Here's a picture of a simple patch:

What I'm really trying to show are techniques for interacting with music and video. I could see using objects in the room that can be arranged to create musical patterns using an arpeggiator or a loop player, and this can be combined with video on all four walls. MIDI or other methods can simultaneously control lights and other electronics. You could create a human theremin by having two people move around the room in relation to each other.

It's also interesting to let several people move around in the SCR holding multiple tags; you can create semi-musical patterns by working as a group. It's a fun thing, but points to some interesting possibilities. I've also adapted a Wiimote in the same manner.
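
The human theremin idea could be sketched like this in Python: take the positions of two tags, compute the distance between them, and map that distance onto a range of MIDI notes. This is only a thought experiment, not something that exists in the prototype; the note range, the maximum distance, and the choice that 'closer means higher' are all assumptions.

```python
import math


def distance_m(a, b):
    """Straight-line distance between two (x, y, z) positions in meters."""
    return math.sqrt(sum((p - q) ** 2 for p, q in zip(a, b)))


def distance_to_note(d, max_distance_m=8.0, low_note=48, high_note=84):
    """Map a distance to a MIDI note: the closer the two tags, the higher the note.

    Distances beyond max_distance_m (an assumed room diagonal) give low_note.
    """
    closeness = 1.0 - min(max(d / max_distance_m, 0.0), 1.0)
    return low_note + round(closeness * (high_note - low_note))
```

A real theremin is continuous, so something like pitch bend would probably sound better than stepping through discrete notes, but the mapping is the same idea.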

Tuesday, October 28, 2008

Adapting InfoMesa to the Social Computing Room

InfoMesa is a project to allow scientists to do more science and more discovery in a collaborative and data-rich environment. The metaphor that we have elected to use as the underlying fabric of the InfoMesa is a Whiteboard.

InfoMesa allows any kind of data or visualization to be added to the Whiteboard. Far from static, these tools are interactive, allowing data to be absorbed from data sources like Oracle, SQL Server, Excel Spreadsheets, XML or even Cloud-based web services. InfoMesa, when complete, will support imagery, video, 2D connected models, 3D models (lit in a photorealistic manner), web searches, results from web service calls, Image Tile Maps, ScatterPlots, Sticky Notes, Ink Notes, Rich Annotations and Associations.

Check the blog link for screen shots, it's a really interesting application, as well as a nice example of the capabilities of WPF (Windows Presentation Foundation). As a dyed-in-the-wool Java and Ruby programmer, I don't necessarily fit the typical Microsoft bandwagon profile, but I am having quite good success leveraging the WPF framework for the challenging environment in our Social Computing Room, which is essentially a 12,288 x 768 desktop.

A bit of a rewind: the Social Computing Room is part of the Renci Engagement Center at UNC Chapel Hill. The SCR has a 360-degree visual display running on all four walls, with 12 projectors in all (three per wall). The relevant model we were already working on was a supporting environment for researchers working in collaborative groups, and considering LOTS of data at one time. The SCR is a great venue for approaching problems that fit the 'small multiples' mold.

One of my colleagues, Dave Borland, had created a prototype called 'Collage'. This prototype used OpenGL and C++ (including some nifty wxWidgets sleight of hand to allow OpenGL to render such a large visual application). Collage handled cool things like letting the mouse, and any images, wrap all the way around the room. Collage could also play videos, and we were looking at adding other capabilities. Another cool part of Collage was the ability to intelligently 'lay out' images. For example, it was a common activity to expand each image to the size of one 'projector', avoiding any stitch lines between displays. We were also working on displaying metadata about the images on command, sorting data various ways, and generally assisting 'small multiple' visualization tasks.
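
The wrap-around behavior boils down to modular arithmetic on the x coordinate. Here's a small Python sketch of the idea (Collage itself is C++/OpenGL, and this is not its code); the function names are made up, and the 12,288-pixel width is the desktop width of the room.

```python
DESKTOP_WIDTH = 12288  # total width of the 360-degree desktop, in pixels


def wrap_x(x):
    """Wrap an x coordinate so that moving off one end re-enters at the other."""
    return x % DESKTOP_WIDTH


def split_spans(left, width):
    """Return the (start, length) spans an image covers on the desktop.

    An image that straddles the seam is split into two spans, one at the
    far right and one at the far left. Assumes width <= DESKTOP_WIDTH.
    """
    start = wrap_x(left)
    if start + width <= DESKTOP_WIDTH:
        return [(start, width)]
    first = DESKTOP_WIDTH - start
    return [(start, first), (0, width - first)]
```

For example, a 500-pixel-wide image whose left edge sits at x = 12000 would be drawn as 288 pixels at the right edge of the desktop plus 212 pixels starting at x = 0.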

The downside of Collage is that it was a bit hard to extend, requiring a lot of OpenGL and wxWidgets prestidigitation to add new features. There were further plans to add Wiimote integration for multi-user input, and the ability to assign functionality to each wall. E.g., have a magnification wall, where thumbnails that were dragged to the magnification wall would automatically size for comparison.

After seeing InfoMesa in prototype form, I realized that many of the ideas we had in Collage mapped nicely onto the InfoMesa concept, and InfoMesa really moved the ball down the field. The first question I had was whether WPF would support, in a performant manner, a 12,288x768 desktop, and I was pleasantly surprised! The thing I've been working on for the past couple of weeks is taking the InfoMesa code, and adapting it to cover the functionality we already had in the OpenGL Collage prototype. I've been concentrating on the visual interface so far, leaving persistence, annotation, and metadata for later work. Here's a short vid of the CollageWPF adaptation of InfoMesa:

I wanted to hit on a few of the 'features' we've added, some requested by researchers who are using the prototype:

- InfoMesa is turned 'inside out', maximizing real estate. Wrapping controls and toolbars around the whole display doesn't work on a big wall, so I went with a right-click context menu. It would be cool for InfoMesa to be able to either expand to the full desktop or display its interface! Otherwise, it might be cool to concentrate on partitioning the application such that the host 'window' can be easily customized for various display types.

- Ability to automatically lay out and size imagery, which I implemented by creating a SceneManager to describe the environment, and a LayoutManager that can be subclassed for various layouts. The first LayoutManager does a scale and position to get one image per projector. The idea is that SceneManagers could be created for other viz environments, such as a 3x3 viz wall, or a 4K high-def display.

- I started thinking about a base 'widget' that can be subclassed to create other tools. Here I've still got things to learn about InfoMesa! I also started thinking about how these subclassed widgets would keep and share metadata, and allow the host 'canvas', or 'Universe', to know its widgets, and be able to manipulate them for things like layouts.

- I added a widget to display 'time series' images in a player. It will eventually work by synchronizing multiple 'time series' viewers so researchers can consider different model runs simultaneously. I also added a widget to digest a PowerPoint file, break it into images, and then lay out those images.

- The original InfoMesa zooms the entire desktop. Researchers were really looking to scale individual images.

- I added mouse-over tool tips to display image metadata.
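
The SceneManager/LayoutManager split mentioned in the list above could look something like the following Python sketch. The real code is C#/WPF, so this is only an outline of the idea: the class names other than LayoutManager are invented here, and the scene assumes the 12,288x768 desktop divides into twelve 1024x768 projector regions, which is my assumption for the example.

```python
from dataclasses import dataclass


@dataclass
class Scene:
    """Describes a display environment (assumed geometry, see above)."""
    projector_count: int = 12
    projector_width: int = 1024
    projector_height: int = 768


@dataclass
class Placement:
    """Where an image ends up on the desktop, in pixels."""
    x: float
    y: float
    width: float
    height: float


class LayoutManager:
    """Base class: subclasses decide how images are arranged in a scene."""

    def layout(self, scene, image_sizes):
        raise NotImplementedError


class OnePerProjectorLayout(LayoutManager):
    """Scale each image to fit one projector region and center it there."""

    def layout(self, scene, image_sizes):
        placements = []
        for index, (w, h) in enumerate(image_sizes[: scene.projector_count]):
            # Uniform scale that fits the image inside a single projector.
            scale = min(scene.projector_width / w, scene.projector_height / h)
            new_w, new_h = w * scale, h * scale
            left = index * scene.projector_width + (scene.projector_width - new_w) / 2
            top = (scene.projector_height - new_h) / 2
            placements.append(Placement(left, top, new_w, new_h))
        return placements
```

A different environment, such as a 3x3 viz wall, would get its own scene description, and the same layout classes could be reused against it.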

More later....be sure to vote tomorrow. As a political junkie, I'm sure I'll be pretty tired looking on Wednesday morning.

Monday, April 14, 2008

NetBeans 6.0.1 running like a pig...here's how I fixed it

This is in my netbeans.conf, which should be under Program Files/NetBeans 6.0.1/etc on Windows. The critical change was the memory config:

netbeans_default_options="-J-Dcom.sun.aas.installRoot=\"C:\Program Files\glassfish-v2ur1\" -J-client -J-Xss2m -J-Xms32m -J-XX:PermSize=32m -J-XX:MaxPermSize=200m -J-Xverify:none -J-Dapple.laf.useScreenMenuBar=true"

Now NetBeans is running quite well. I'm hacking some Sun code samples to get data from the accelerometer to build a prototype air mouse. This isn't a standard mouse, but rather a way for multiple users to manipulate visualizations in the Social Computing Room. For grins, here's a shot of the space...